[[TOC]]

S3 Security (Resource Policies and ACLs)

Bucket Policies:

- Are a form of resource policy - what that resource can access, not a user.

- Allow or deny access from same or different accounts.

- Allow or deny access from Anonymous principals - can grant anonymous access (public without logging in)

- adds in the principal to the Statement in the policy itself, which means that we can then set that to allow or deny a certain principal to this S3 bucket. This is different from the identity policy.

- if the principal is in the statement, it's a resource policy.

Policy Explanation

{

"Version": "2012-10-17", ## The policy version

"Id": "S3PolicyId1", ## Policy ID

"Statement": [ # start of the statement block

{

"Sid": "IPAllow", # the identifier of this statement

"Effect": "Deny", # allow or deny

"Principal": "*", # the principal that we are allowing or denying, in this case, it's Denying All.

"Action": "s3:*", # any actions on this bucket (get, put, etc)

"Resource": [

"arn:aws:s3:::DOC-EXAMPLE-BUCKET", # This is the specific part of the S3 bucket. Can be certain objects or "folders" if you will.

"arn:aws:s3:::DOC-EXAMPLE-BUCKET/*"

],

"Condition": { # if this condition is true - source IP has to be this IP

"NotIpAddress": {"aws:SourceIp": "1.3.3.7/24"}

}

}

]

}

Different policies allow or deny

ACLs

- Don't use these, use bucket policies instead.

- significantly less flexible than bucket policy

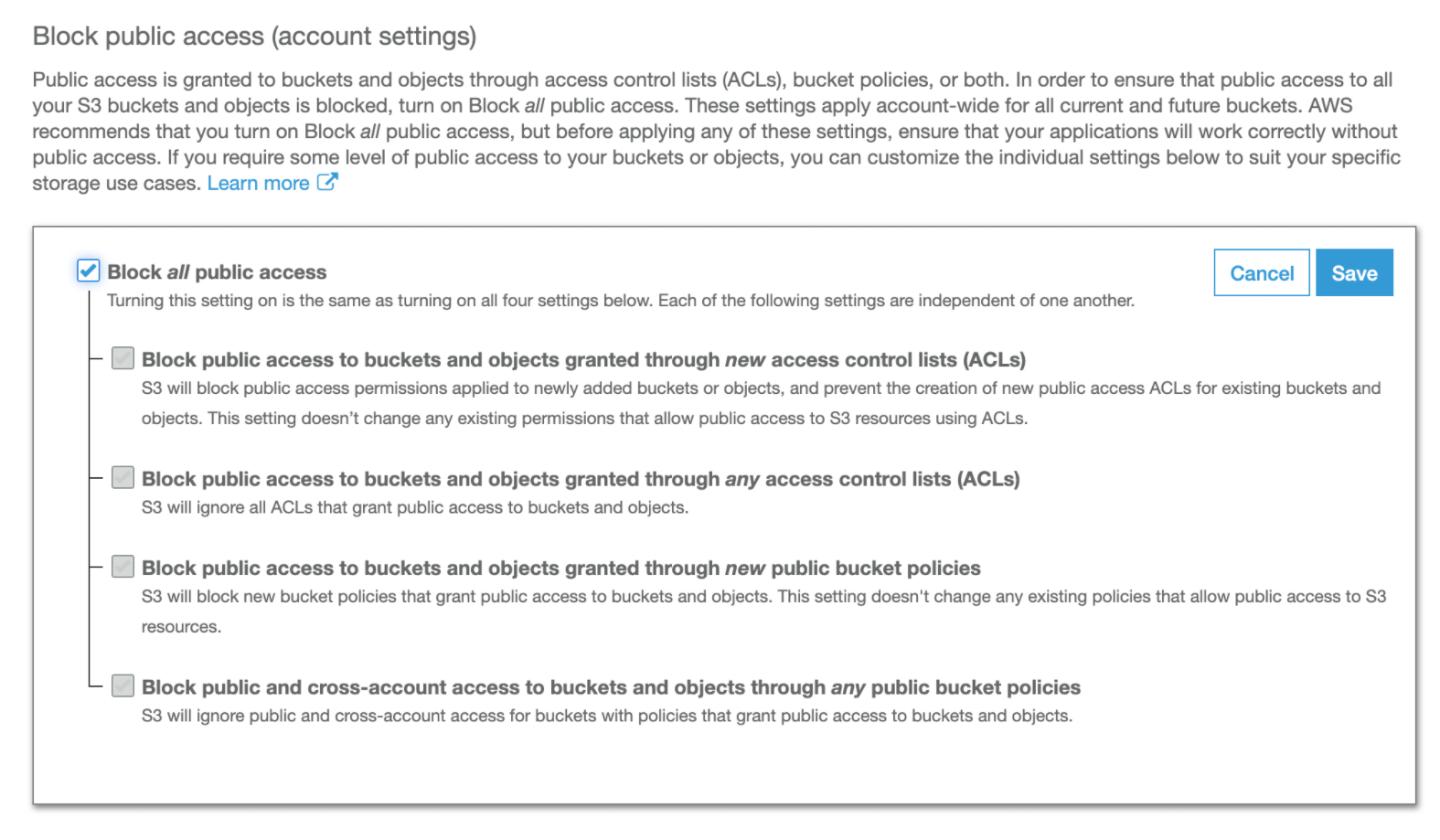

Block Public Access

Documentation This is a 3rd level of security that applies no matter what the bucket policies say. These ONLY affect public and anonymous access requests.

- Block ALL public access

a. Does exactly what it says. Blocks all public and anonymous access across the complete S3 bucket. - Block public access to buckets and objects granted through new access control lists:

a. Doesn't change any existing permissions, but applies to any new ones that are created. - Block public access to buckets and objects granted through ANY access control lists

a. blocks all ACLs - Block public access to buckets and objects granted through NEW public bucket or access policies

a. This one doesn't change any existing permissions on the bucket or access policies, but applies to any new ones that are created. - Block public and cross-account access to buckets and objects through ANY public bucket policies a. blocks bolth existing AND new policies from accessing the bucket.

Tips:

- Know where you are trying to allow or blocking access from.

Identity

- Controlling different resources

- Same account

- Preference for IAM

Bucket

- just controlling the S3 bucket

- anonymous or cross-account.

- Locks down the buckets by default

ACL's

- Dont use ACLs - ever.

S3 Static Hosting

- Normal access to S3 is using the AWS APIs.

- Static website hosting allows access via HTTP and using index and error documents via html documents.

- When this is set up for static website hosting, a website endpoint (DNS name) is created.

- Not for dynamic apps or webpages.

- custom domains must match the S3 Bucket name.

Two scenarios for S3 static website hosting:

Offloading

- good for offloading and out of band pages. Images can be offloaded to S3 and the database can reach into S3 for that image.

Out of Band

- Error pages for a dynamic app might be pointed to S3. Maintenance pages as well.

- Change the DNS record to failover to this. Can also set to automatically change as the result of a failed health check.

Pricing

- per month

- transfer in is free.

- transfer data out there is a per gb charge.

- charged per 1000 operations

Demo: Creating Static Website with S3

Demo

Object Versioning and MFA Delete

Versioning

Object versioning is set at the bucket level

- cannot disable this once it is enabled

- can suspend it, but not disabled it.

- Disabled -> Enabled -> Suspended

Allows you to store multiple versions of objects in the bucket Introduces the concept of an ID to the object.

- 1st instance of the object might be 11111, but uploading another one to overwrite may create a new version called 22222. You can explicitly point to a specific version of the file if needed.

- The pointer to the latest version or current version points to the newest ID.

No deletion, but instead it sets the Delete Marker on a new version of that object.

- Wen you delete an object, this then just says, hey, you can't access this version because it says it's been deleted.

- If you want to restore this object, you delete the delete marker.

Tips:

- cannot disable, again, this is important to remember.

- space is consumed for all of the versions. So if you have 10 versions of a 10gb file, you have 100gb of space taken up and billed for.

MFA Delete

Enabled in the versioning configuration

- MFA is required to change bucket versioning state

- MFA is then required to delete versions.

- MFA serial and the token passed with the API calls if you're using the CLI

Demo: S3 Versioning

Demo

S3 Performance Optimization

Multipart Upload

Uploads occur using the single stream using the PutObject API Requires a full restart of the stream, so if you've uploaded 9.994gb of 10, you'll need to restart it all.

- minimum size is 100mb

- maximum can be 10000 parts.

- no use for single part upload over 100mb anyways.

- data parts are broken up

- individual parts can fail and then those parts can be restarted.

- transfer rate is the speed of all the parts

S3 Accelerated Transfer

This is used to find the closest geographic location to where the uploader is by using AWS's own Edge locations

- bucket name cannot have periods

- it finds the edge location and then gets it into the AWS network and then uses high speed internal networks to get it to the region that the S3 is in.

Demo: S3 Performance

Demo

Theory: Encryption

Overview

Two types: Encryption at rest and encryption in transit.

At rest:

- This is the data that is stored on one device. On a laptop, on a server, in your S3 bucket.

- Helps with physical theft.

In Transit:

- This is data that leaves one place and enters another. Sending your credit card through to Amazon.com is an example.

Concepts:

- Plaintext - raw fresh data that is unencrypted.

- Algorithm - some code that is applied to the plaintext with a key to create encrypted data (ciphertext)

- Key - this is the known that is applied to the algorithm to create the encrypted data (ciphertext)

- ciphertext - encrypted data

You can go from plaintext to ciphertext by using the algorithm and the key and from ciphertext to plaintext using the algorithm and the key

The Key

Symmetric key - used to encrypt and decrypt the data. Both people need to have the same key to encrypt and decrypt.

- the key could be a password, such as

secretk3ybut you'll need to transfer this around, probably in plaintext - what if this key is compromised?

Asymmetric key - use the public key to encrypt the data but then decrypt it using the private key.

- used when two or more parties are involved

- public key is released to the world which can be used to encrypt data, but to decrypt, the private key is only used by who holds the private key.

- used by ssh or PGP.

Signing - A user can sign a file with the private key and then someone else can use the public key to verify who signed it.

Steganography - hiding data within data.

- changing colors of certain pixels in images and use an algorithm to extract this data.

Key Management Service - KMS

Overview

Allow you to create, store and manage both symmetric and asymmetric keys

- Handles cryptographic operations

- KMS keys do not leave KMS - FIPS 140.2

CMKs

Logical - contains ID, date, policy, description and the state....that is backed by physical key material Generated or imported CMKs can be used up to 4kb of data - this doesn't mean you can't encrypt a 100gb file using these CMKs

- Uses a Data Encryption key that can be used to encrypt larger than 4kb.

- KMS does not store the DEKs in any way.

- creates a link between the CMK and the DEK so that it knows which one was created and what created it.

- creates both a plaintext and a ciphertext version of that key

- encrypt using the plaintext key, then discard the plaintext key

- encrypted key is stored with the data

Concepts

- CMK's never leave the region they are created in

- AWS Managed or Customer Managed CMKs

- Customer managed keys are more configurable

- CMKs support rotation

- backing keys and all previous backing keys are stored

- Aliases can be used as well.

Key Policies

Key Policies are used on each of the CMKs. Each CMK has a key policy. Policies also enable strict role separation

- someone who encrypts may not be able to decrypt.

Example:

{

"Sid": "Enable IAM policies",

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::1234567891011:root"

},

"Action": "kms:*",

"Resource": "*"

}

Object Encryption

Client-Side vs Server-Side Encryption

- simple to understand - encrypt first, then upload vs just uploading the raw data.

Types of Server Side Encryption

- Server-side encryption with Customer Provided keys (SSE-C) a. Users manage the keys, S3 manages the endpoint b. user needs to provide the key with the data to the S3 endpoint both to encrypt and to decrypt

- Server-side encryption with Amazon S3-Managed Keys (SSE-S3)

a. Users handle the data and then S3 uses a master key to create an encrypted key with the data and sends it to the S3 Storage. b. This is a bit similar to the Customer Provided keys, but S3 handles it. c. uses AES256 d. Anyone with full S3 permissions can decrypt the data. Don't give a good way to handle separation of duties. - Server-side encryption with Customer Master Keys (CMKs) stored in AWS KMS (SSE-KMS) a. this one is very similar to the SSE-S3, but introduces the requirement for a CMK b. CMKs allow for rotation and separation of duties so this also is applicable to the encryption process.

Default bucket Encryption

Send the encryption header for all uploads to this bucket to force S3 to encrypt everything.

Demo: Object Encryption

Demo

S3 Object Storage Classes

Types

S3 Standard

S3 Standard - IA - Infrequent Access

- 11 9's

- Retrieval fee.

S3 One Zone - IA

- 11 9's

- retrieval fee

- different that Standard IA because there is additional risk with the data being in one zone.

- used for long lived, non critical and replaceable data

S3 Glacier

- Same durability 11 9's

- think of this as cold objects - they require a retrieval process

- archival storage - can tolerate recovery after a couple hours

- 90 day minimum billable duration

Glacier Deep Archive

- 180 days minimal billable duration

- 12 to 48 hour restore times

S3 Intelligent Tiering - contains 4 tiers of storage -Frequent

- Infrequent

- Archive

- Deep Archive

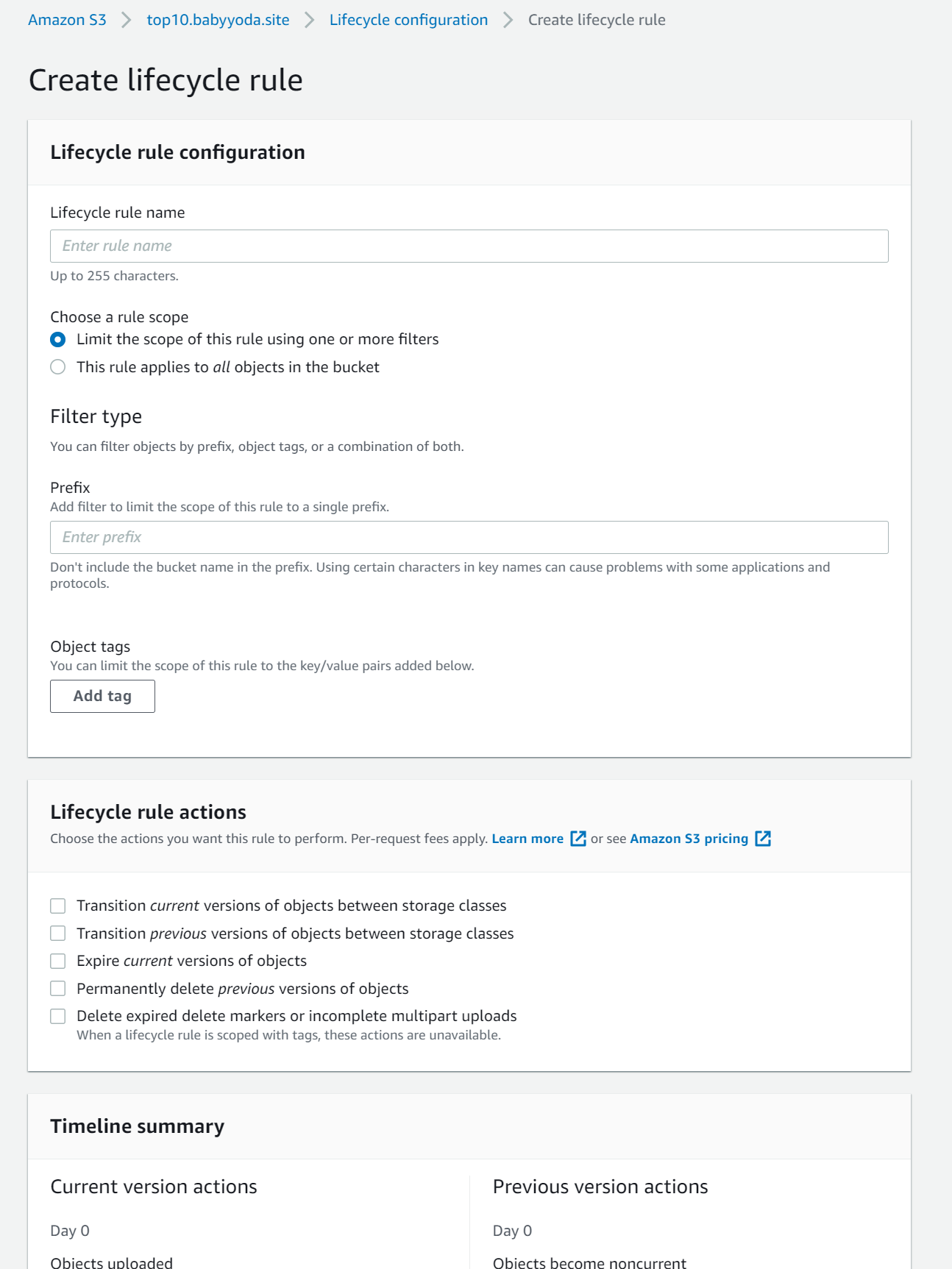

S3 Lifecycle Configuration

Lifecycle rules are a s set of rules...rules are a set of actions...on a bucket or groups of object to transition objects between S3 classes. Can also include expiration options (Older than 90 days, etc..)

Intelligent tiering can move the lifecycle through all of the types of storage classes by setting up rules.

- Example: if after 90 days, you want to move from S3 Standard IA to S3 Glacier, set the rules to to that.

- remember your minimums which can limit how quickly you move objects - probably can't go from S3 Standard to S3 Glacier after 4 days.

Quick Demo

- navigate to the S3 Console

- Click on your S3 bucket

- Navigate to your Management tab

- Click on Edit Lifecycle rules.

- Set your rules here.

S3 Replication

Types

CRR - Cross region replication SRR - Same region replication Cross account replication - needs a bucket policy on the source and destination in order to allow the two to talk to each other.

What to replicate across?

- All objects

- subset of objects - all objects in the BabyYodaS3 bucket that are in the images prefix ("folder")

What storage classes of S3 buckets?

- usually you want to replicate across the same storage types

- S3 Standard --> S3 Standard

Ownership

- Owned by the source account in the destination bucket if transferring between accounts

Replication Time Control

- 15 minutes usually between source and destination to make sure that they are in sync.

Tips:

- Not retroactive and versioning needs enabled

- not bidirectional - one way replication

- handles it unencrypted and encrypted with SSE-S3 and SSE-KMS but not SSE-C because of the control of the keys.

- source bucket owner needs permissions to objects.

- does not replicate system events and cannot replicate Glacier or Glacier Deep Archive

- deletes are not replicated.

When to use replication:

- SRR for Log aggregation

- SRR for Prod and Test sync

- SRR for resilience but keeping the data in the same region (obvi don't want to replicate with a different country)

- CRR - for global resilency

- CRR for latency reduction - closer to their location = less latency.

Demo: Cross Region Replication of an S3 Static Website

Demo

S3 Presigned URLS

Think about how we've granted access to the bucket's contents thus far. You basically need to do one of the 3 options:

- give them an AWS account

- Provide them AWS credentials

- Make the entire bucket public what if you have an online store and you sell a book for $50 and you need to provide access to that book? What if you've sold it to 5001 people? What if you store multiple books in that S3 bucket? If you have an S3 bucket and you need to let someone into it that is unauthenticated or anonymous, you can create presigned URLS and give to them for a limited range of access.

Theory:

- Upload contents to the bucket

- Generate a presigned url

- Give that url to someone to access the object.

Tips:

- can create a URL for an object you have no access to

- when using the URL, the permissions match the permissions to the identity that created it. If you're generating presigned url's with write access, know that there is the possibility that someone could damage your book

- access denied means that the user never had access or doesn't have access now.

- don't generate with a role because that URL expires with the Role.

Demo: Create and using Presigned URLS

Demo

S3 Select and Glacier Select

Can retrieve parts of S3 objects without retrieving all of the objects.

- retrieving that 5TB file takes all 5TB of data.

- Filtering on the S3 bucket side doesn't reduce this

S3 Glacier lets you use SQL like statements to retrieve parts of these file.

- these allow you to filter the data in S3 before transferring the data out.

S3 Event Notifications

Notifications can be generated when events occur in the bucket

- can be delivered to SNS SQS or Lambda functions

- object Created

- Object Delete

- Object Restore

- Object Replication

S3 Access Logging

Enable the logging on the source bucket Enable access to the S3 Log Delivery group Enable access to the S3 Log delivery group on the destination S3 bucket.